Legislation Must Combat Social Media Giants

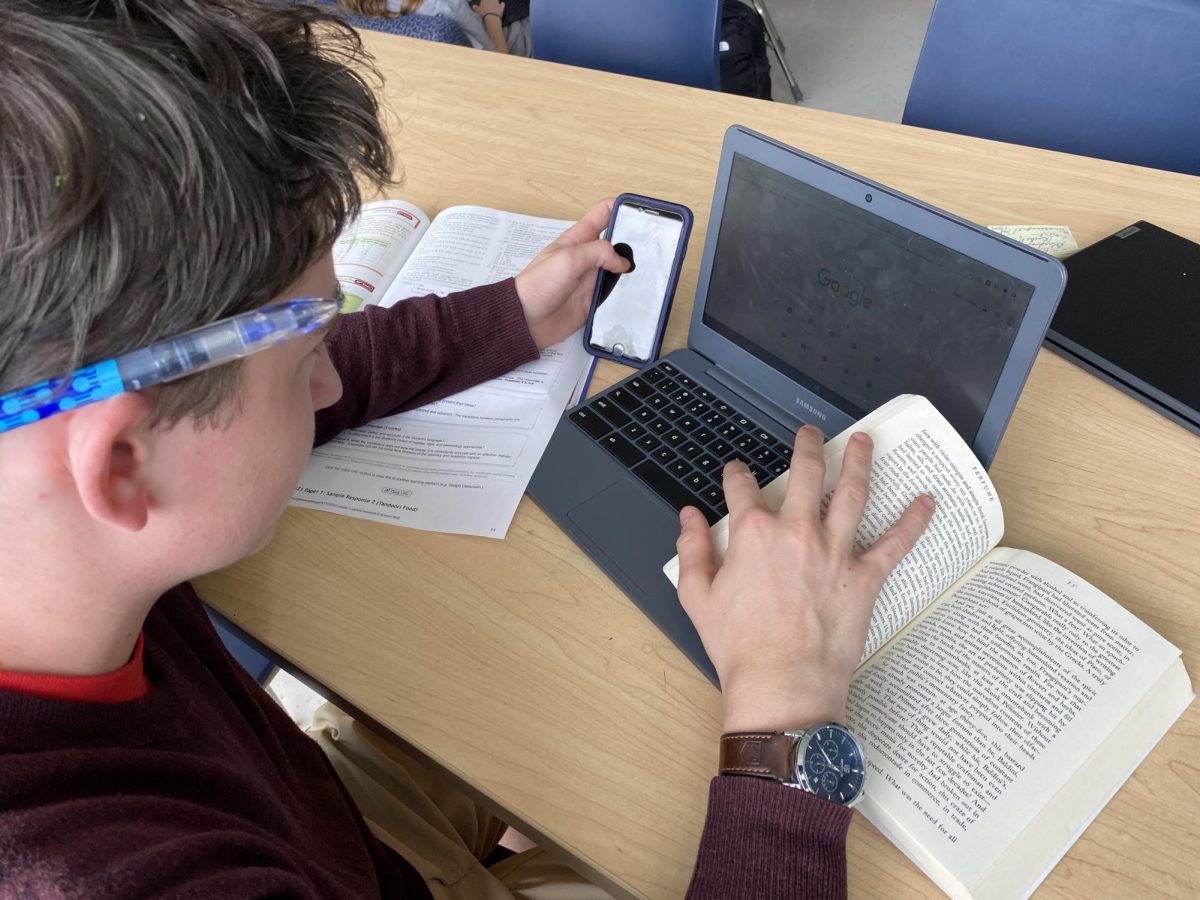

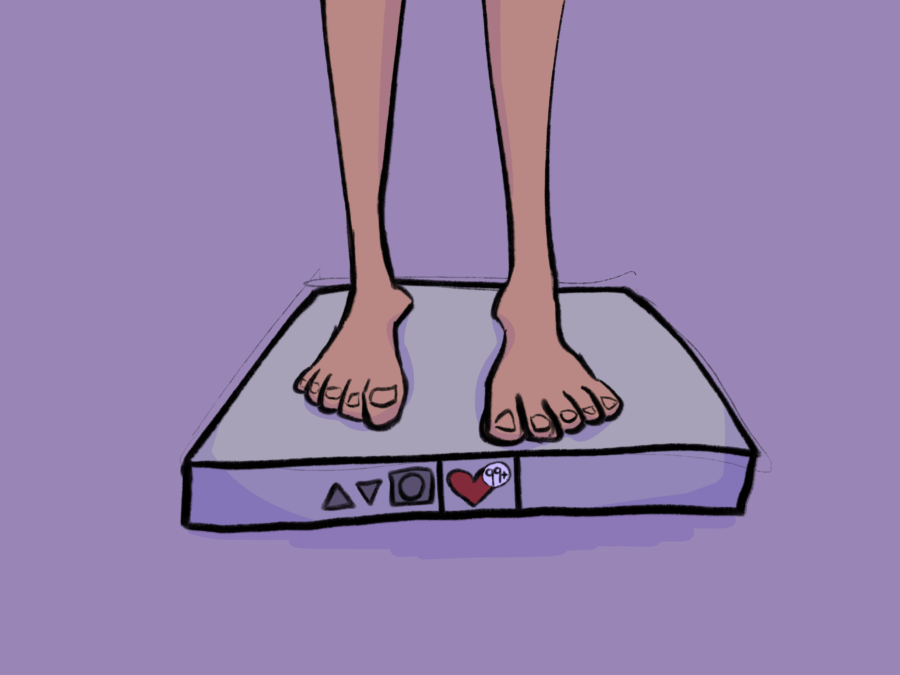

“We make body image issues worse for 1 in 3 teen girls,” states internal research Meta conducted on Instagram, one of its platforms.

However, the company doesn’t seem to care.

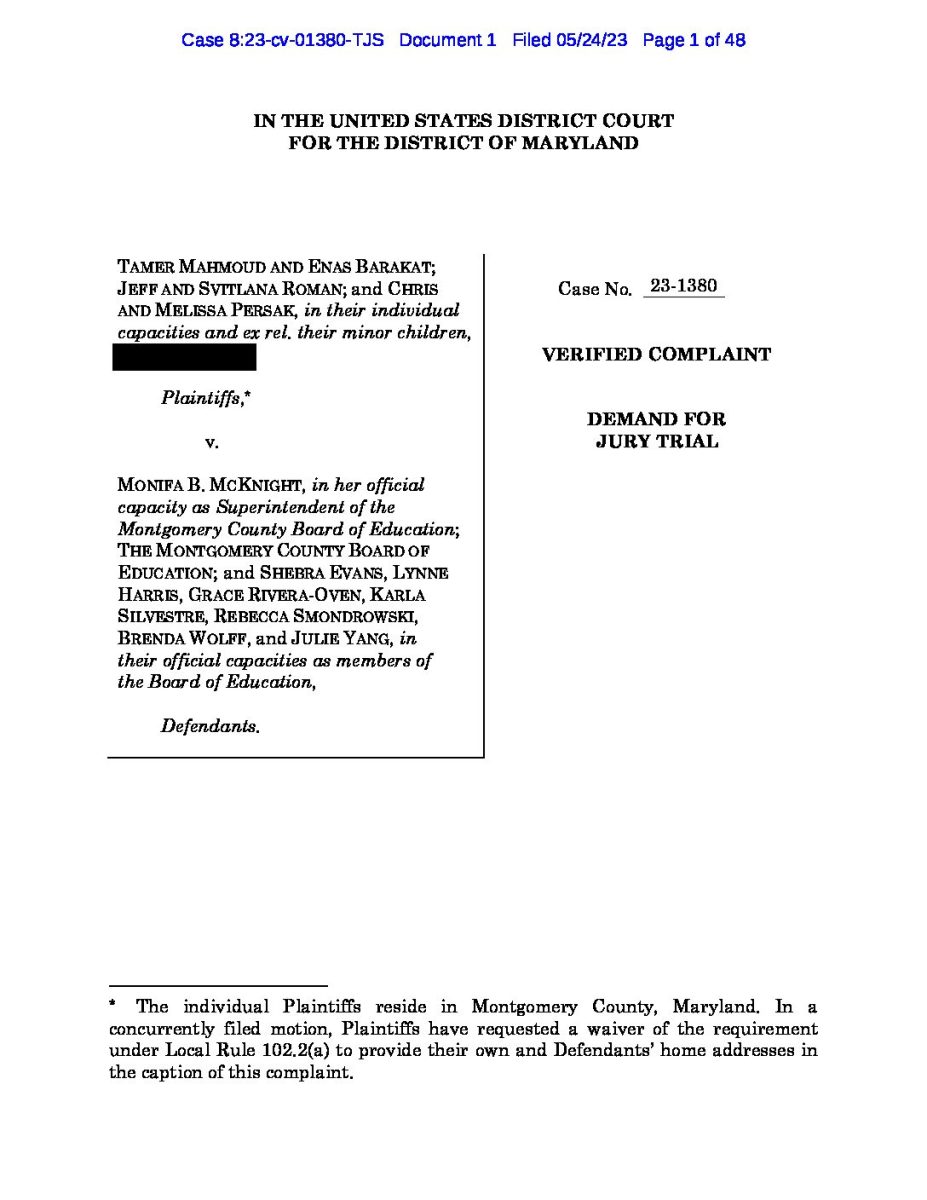

In recent years, tech giants like Facebook have faced a plethora of lawsuits over the harms caused by their platforms. These include Gonzalez v. Google and Twitter v. Taamneh, which asked whether platforms can be held liable for harmful content they host and recommend, as well as lawsuits filed by over 1,200 families against TikTok, Snapchat, YouTube, Roblox and Meta over teens’ mental health. These cases have repeatedly drawn attention to evidence that social media companies are aware of the harm their platforms cause, yet they still fail to take action to prevent it.

“These companies are at fault for what happens on their platforms, but they will not do anything to fix it as long as it makes them money. To see any sort of noticeable change, the government needs to enact stronger legislation restricting social media,” said sophomore Sofia Lindholm.

Many people, including elected officials, have tried to find a way to confront this issue and make the internet safer for its younger users while still respecting freedom of speech and preserving the benefits technology provides. Because Congress has not acted, many states have begun proposing new laws that would provide more protection to children using social media.

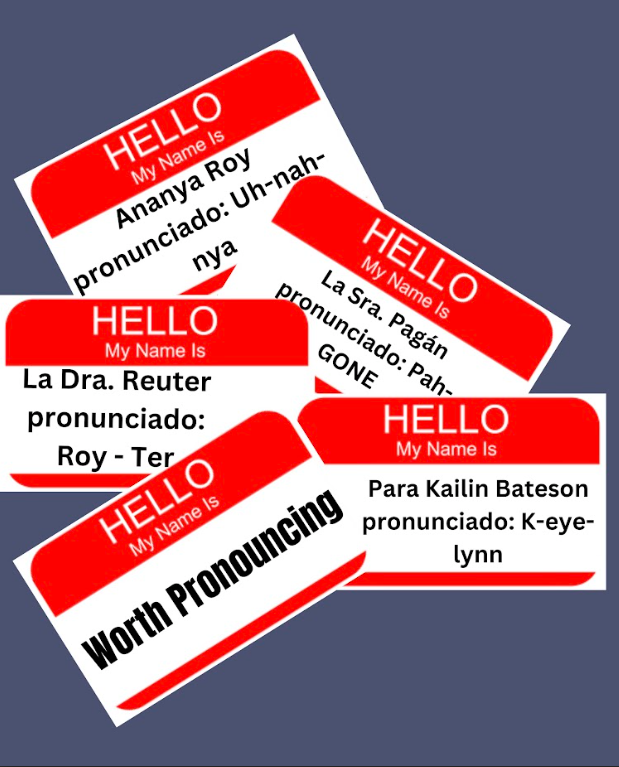

“States really need to do something about restricting social media for children even if companies are not taking action. The signs of its negative effects are already showing in this generation and the quicker they take action, the better it is,” said junior Ananya Roy, co-founder and leader of Barons Legal.

The push for such legislation gained momentum in October 2021, when former Facebook product manager Frances Haugen leaked a set of documents from the social media company’s internal social network. These documents contained presentations, research studies, discussion threads, and strategy memos revealing that Facebook (and its subsidiary, Instagram) knowingly placed a higher value on profits than on the public good.

If enacted, Maryland’s House Bill 901/Senate Bill 844 would limit the autoplay function on videos for children, automatically set new accounts to private, prevent unknown adults from messaging children, and stop the tracking of children’s data on social media. Although state-level legislation aimed at regulating global online platforms may not be the ideal solution, it is currently the only viable legislative avenue. We must rely on such measures while we await more comprehensive action from Congress, including the passage of critical bills such as the Kids Online Safety Act.

“The Kids Online Safety Act sounds like an amazing idea, long overdue. Whether it gets passed or not, I believe that the Kids Online Safety Act is moving us in the right direction, and will bring more attention to kids’ health on social media, which will eventually bring change,” said Quince Orchard junior Devin Lee, co-founder of Social Media for Social Justice. Lee testified as a witness before the Maryland State Legislature in support of a design code bill that would help keep kids safer online by restricting their actions on social media and changing the way tech companies personalize the algorithms kids see.

The Kids Online Safety Act is a bipartisan bill that would require social media platforms to give users the option to turn off addictive features, such as autoplay or recommendations for other videos. Social media companies would also have to prevent the promotion of self-harm, suicide, eating disorders, substance abuse, sexual exploitation, and products that are illegal for minors. The act would also require an independent third-party review of each platform to evaluate its risks to minors and confirm that adequate measures are in place to prevent those risks. The Kids Online Safety Act failed to pass in the last Congress, but legislative aides are working on revising the act so it can be reintroduced this term with more sponsors.

As states enact legislation to address rising concerns about social media and teens, more pressure will be put on Congress to act. Social media companies need to be held accountable for the impact of their platforms and fix the issues within them. Improvements will not come from the companies themselves, so the government needs to enact legislation like the Kids Online Safety Act to protect young and vulnerable children from the damaging effects of social media.

Sara Torres, a B-CC senior, is the head of our Spanish Section, Barones Bilingües. She loves cows, writing, and listening to music. Sara cannot wait to...

Claire Wang, a B-CC senior, serves as Co-Director of The Tattler's Art Team and as a contributing writer. She also has two dogs and two cats.